Art of AI: Will AI art generators displace human creativity?

Can art created by artificial intelligence blend in with real artists’ pieces? Learn the ins and outs of AI art generators and how they fit into our world.

Joanna Konieczna

Jan 12, 2023 | 24 min read

A few weeks ago, a discussion arose in our company about whether it is acceptable to award first prize in an art competition to an image generated by artificial intelligence (AI). Some background: last summer, the Colorado State Fair fine art competition awarded first prize to Jason Allen for his work "Théâtre D'opéra Spatial". The snag is that this piece was not painted by Mr. Allen, but generated using the Midjourney AI art generator.

After a picture of the grand prize blue ribbon next to the winning image was posted on the Midjourney Discord chat, Internet users did not fail to express their opinions (very strongly, most often).

“We’re watching the death of artistry unfold right before our eyes — if creative jobs aren’t safe from machines, then even high-skilled jobs are in danger of becoming obsolete,” one Twitter user wrote.

“This is so gross,” another wrote. “I can see how AI art can be beneficial, but claiming you’re an artist by generating one? Absolutely not.”

Mr. Allen was not distressed by his critics, explaining that he created hundreds of images using various prompts and spent many weeks polishing his work before deciding to transfer it to canvas and enter it into the competition. If you want to get a closer look at the perspective of the author of this controversial work, I recommend you read the New York Times article, which outlines Mr. Allen's approach to AI generated art and his concerns about the future of art.

Our MasterBorn discussion (MasterDiscussion?) was not as heated and emotional as the one on Twitter, but let's be honest - few discussions can match the fiery temper of those on Twitter. However, this topic inspired us so much that we decided to take a closer look at the subject in this article.

AI painting generators as a computer vision application

AI art generators are examples of cutting-edge artificial intelligence algorithms. The most popular engines have been developed over the past few years and are gaining incredible popularity not only in the IT world but also among artists, designers, and ordinary people. One of the reasons AI art is so widely popular is how simple such generators are to use. Thanks to built-in text-to-image converters, we can tell the computer what to paint using everyday human language. Such commands are called prompts, and by choosing the words in our prompts carefully, we can steer the generated images toward what we have imagined.

AI image generators are part of a field of AI called computer vision (CV). What is computer vision? Well, it's a field that focuses on replicating the complexity of our human visual system and the way we experience the world. This means that a computer should not only have the ability to see images (i.e., the equivalent of our human eyesight), but should also be able to mimic our perception, and thus have the ability to understand what it sees.

There are many different computer vision applications, starting with the more "responsible and serious" ones like detecting skin cancer from photos (check out how the US startup Etta Epidermis does it). You can find more information about it and other startups working for a better tomorrow in my previous article. Another example worth mentioning is the use of algorithms that recognize elements within a "computer's sight" in autonomous cars, where the computer has to constantly analyze the situation around it, read traffic signs, watch out for pedestrians and other traffic participants, etc. Other examples of computer vision applications include the detection of people wanted by police on city cameras, as well as all kinds of industrial applications related to IoT and the fourth industrial revolution.

However, computer vision has another, more entertaining face! There are many less serious uses of CV that serve mainly to entertain us, such as virtual reality applications and games, filters on Instagram or TikTok, and simply creating art, which is our main topic in this article.

How is it possible for AI to generate completely new images?

The creation of completely new images not seen before by artificial intelligence is possible thanks to so-called generative models. This is a group of models that generate new data instances, while traditional discriminative models are designed to distinguish between different types of data instances. Take, for example, a collection of cats and dogs. A discriminative model that gets a new image of an animal will be able to determine whether it is a cat or a dog based on the information collected about these species so far. A generative model, on the other hand, needs to learn the full range of characteristics dogs and cats have, so that it will be able to generate a picture of a completely new cat or dog when asked to do so.
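The cat-and-dog contrast above can be made concrete with a toy sketch. Everything in it is an assumption made for illustration: a single made-up number (body weight) stands in for a whole image, the discriminative model is a simple cut-off, and the generative model is one Gaussian per class. Real image models are vastly more complex, but the division of labor is the same.

```python
import random
import statistics

rng = random.Random(0)

# Hypothetical 1-D feature (body weight in kg) standing in for a whole image.
samples = [(rng.gauss(4.0, 0.8), "cat") for _ in range(200)]
samples += [(rng.gauss(20.0, 4.0), "dog") for _ in range(200)]

def fit_threshold(data):
    """Discriminative: pick the cut-off that misclassifies the fewest samples."""
    best_t, best_errors = None, len(data) + 1
    for t, _ in data:
        errors = sum((weight < t) != (label == "cat") for weight, label in data)
        if errors < best_errors:
            best_t, best_errors = t, errors
    return best_t

def fit_gaussians(data):
    """Generative: estimate how the feature is distributed within each class."""
    params = {}
    for label in ("cat", "dog"):
        weights = [weight for weight, lab in data if lab == label]
        params[label] = (statistics.mean(weights), statistics.stdev(weights))
    return params

threshold = fit_threshold(samples)
params = fit_gaussians(samples)

def classify(weight):
    """Label an existing observation; this model cannot create new ones."""
    return "cat" if weight < threshold else "dog"

def generate(label):
    """Sample a new, previously unseen observation of the requested class."""
    mu, sigma = params[label]
    return rng.gauss(mu, sigma)

print(classify(4.0), classify(20.0))
print(generate("cat"), generate("dog"))
```

Note that `classify` only needs to know where the boundary lies, while `generate` needs a description of each class that is rich enough to sample from, which is why the generative task is the harder one.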

To put it a bit more formally, generative models deal with modeling the joint distribution of the data, while discriminative models deal with the conditional probability of a given label given the observed features. This means that generative models have a much more difficult task and have to "learn more". This is possible (and thus it is possible to generate something that is not a copy of something existing) thanks to three elements:

- High computing power - something that used to be available only to NASA, but today can be bought by the minute

- Understanding human intent - not just through programming, but understanding natural language

- Tailored models - algorithms capable of continuous improvement, acquiring the ability to recognize and interpret images

The general concept of training models that generate images is as follows: we need to provide the computer with a great deal of data in the form of image+caption pairs. Most often, such data comes from what we upload to the Internet. Then, during training, the model builds not only connections between each image and its caption, but also relationships between different objects. In this way, we get a space of named objects and the relationships between them, which we will then use to generate new images based on prompts.

What can go wrong? Threats lurking in the data

Artificial intelligence, and therefore image-generating models, actually build complex mathematical relationships based on the data we provide them. The models take on our human flaws, prejudices and social problems such as racial or gender discrimination, violence, and hate speech. It's up to us humans to make sure the model doesn't "misbehave." What problems might models encounter in our case?

- Incorrectly labeled photos and images at the training stage - If enough photos of airplanes are labeled as cars in our data, our model will stop distinguishing between them. There is also a possibility of deliberate tampering with the model using incorrectly labeled data.

- The use of data depicting violence, hate, pornography, etc. - There is a risk here that models will produce content that is inappropriate for younger users or considered illegal. An additional aspect is the possibility of placing real people in such images in order to discredit a person or accuse them of something they have not done. Image-generating models can thus be used to spread deep fakes and manipulate information, e.g. political, which can be dangerous for both individuals and society as a whole.

- Using copyrighted data - The most popular dataset for training such models contains many proprietary images, as it simply consists of millions of image+caption pairs scraped from the Internet. For example, so many images are downloaded from sites like GettyImages and Shutterstock that some models directly reproduce their watermarks in images. This can work against real authors such as professional photographers or artists who have worked for years creating their own unique style.
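The first risk in the list above, mislabeled training data, can be illustrated with a deliberately simplified sketch. The "model" here is an assumption made for the example: it just remembers which caption most often accompanies each visual cluster (the cluster names "wings" and "wheels" are made up). Real generators learn far subtler associations, but they are exposed to the same failure.

```python
from collections import Counter, defaultdict

def train(pairs):
    """Learn, for each visual cluster, the caption that accompanies it most often."""
    counts = defaultdict(Counter)
    for cluster, caption in pairs:
        counts[cluster][caption] += 1
    return {cluster: c.most_common(1)[0][0] for cluster, c in counts.items()}

# Clean data: every airplane photo is captioned "airplane".
clean = [("wings", "airplane")] * 100 + [("wheels", "car")] * 100

# Hypothetical tampering: 60 of the 100 airplane photos are captioned "car".
poisoned = (
    [("wings", "car")] * 60
    + [("wings", "airplane")] * 40
    + [("wheels", "car")] * 100
)

clean_model = train(clean)
poisoned_model = train(poisoned)

print(clean_model["wings"])     # the clean model still knows what an airplane is
print(poisoned_model["wings"])  # the poisoned model now calls airplanes cars
```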

Ongoing efforts to make AI systems secure

Can we remedy this somehow? Companies developing AI image generators are constantly working to ensure the safety of users and resolve possible legal issues. One approach is to filter the data used to train the models. For this purpose, additional models are built to evaluate which content is inappropriate. However, bearing in mind the hundreds of millions of data items on which the models are trained, this is a time-consuming and therefore costly approach, because it requires images that humans have previously labeled as inappropriate.

On the other hand, we can try to control the number and age of our users. Most companies do this by limiting access to their generators: the user must first fill out an application, wait for approval, and only then gets access to the generator, which is also paid. However, not all companies go this route. For example, in order to use the Stable Diffusion generator, which we'll talk about later, all you have to do is accept the terms and conditions and download the appropriate library, which makes it virtually impossible to control what the user does with the model.

Still another idea is to check the prompts sent by users and block inappropriate ones. Sadly, this is only possible when the model runs on a central server rather than being downloaded to your own computer. For example, the DALL-E generator contains a content filter that blocks prompts containing certain keywords, though users are constantly looking for workarounds for this as well.
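To see why keyword blocking invites workarounds, here is a minimal sketch of such a filter. The blocklist and the function name `is_allowed` are hypothetical; the actual filters used by commercial generators are not public and are certainly more sophisticated than this.

```python
import re

# Hypothetical blocklist; real services do not publish theirs.
BLOCKED_KEYWORDS = {"blood", "weapon"}

def is_allowed(prompt: str) -> bool:
    """Reject a prompt if any of its words appears on the blocklist."""
    words = re.findall(r"[a-z]+", prompt.lower())
    return not any(word in BLOCKED_KEYWORDS for word in words)

print(is_allowed("a knight resting under a tree"))   # passes the filter
print(is_allowed("a knight holding a Weapon"))       # blocked, case-insensitive
print(is_allowed("a knight holding a w3apon"))       # slips through the filter
```

The last call shows the cat-and-mouse problem: a trivial misspelling is enough to get past a plain keyword match, so in practice such filters need to be combined with other safeguards.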

In summary, image generator developers and their users are playing cat and mouse with each other, with some coming up with better and better security features and others trying to get around them. I recommend a great article in The Verge to learn more. I think it's the same with AI image generators as with any other invention: it's neutral in itself, but there will always be people who want to use it in a bad way.

Breakthrough in image generation - Generative Adversarial Networks

Generative Adversarial Networks, or GANs for short, are a type of neural network architecture that has sparked a revolution in generative models. How do people come up with such groundbreaking ideas, you ask? Well, some get apples falling on their heads and others have a drink at the bar with their friends.

How to encourage neural networks to create art?

In 2014, a deep learning scientist named Ian Goodfellow went for a beer with friends at one of Montreal's bars. Apparently, they didn't have any hot gossip to talk about, as their conversation turned to how artificial intelligence could be used to create realistic images. Goodfellow's friends noted that neural networks were very good at recognizing and classifying objects in pictures, but not at creating them. Our brains work very similarly. It is easy for us to judge and classify pictures of landscapes or human faces, while it can be much more difficult for us to create a photorealistic reconstruction of a landscape or face. Inspired by these thoughts, Mr. Goodfellow returned home and began thinking about a solution for training neural networks to generate new images, not just classify existing ones. That same evening, he came up with an idea brilliant in its simplicity involving a "battle" between two neural networks, one of which "falsifies" images, while the other recognizes the "falsifications" of the first.

Over the following weeks, with the help of top researchers at the Université de Montréal, Ian Goodfellow improved and described his work, which he published under the name Generative Adversarial Nets. Over the next few years, GANs became an absolute blockbuster in the AI world and led to many innovations in the field of artificial intelligence resulting in Mr. Goodfellow getting a great job at Google at the age of 33 followed by a position leading teams of researchers for companies such as Elon Musk's OpenAI and Apple. And now, he’s supporting the powerful AI lab that is DeepMind. As time goes on, I believe we will hear this man’s name more and more in the context of groundbreaking work in AI. He is no doubt an icon for many aspiring programmers.

Step by step to AI generated art

Nevertheless, let's return to the GANs themselves. As we already know, the innovation of this model lies in the use of two independent neural networks like players in a game. The first network is called the generator and is tasked with creating "fake" images. The second player is a network called the discriminator and it's tasked with detecting "fake" images. In the beginning, our players are both very bad at the game, though during training, both networks learn to perform better and better. Personally, I like to compare the generator and discriminator to two children learning more and more things together.

Let's take a look at an illustration of the GAN architecture. The generator gets a set of random numbers as input and creates new (synthetic) images from them, without ever having seen the real data before. The discriminator knows what the real data looks like and it evaluates the artificial image created by the generator and classifies the image as real or synthetic. If the generator produces "fakes" that are too weak, the process is repeated. The generator learns to falsify the data better and better as the discriminator learns to recognize the synthetic images. The game ends when the generator produces such good images that the discriminator recognizes them as real. This will ensure that the generator is able to generate on-demand images that are very realistic.
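The game between the two players can be sketched in a few dozen lines. This is a toy under heavy assumptions, not how real GANs are built: the "images" are single numbers drawn around 4.0, the generator is a straight line `a * z + b`, the discriminator is a single logistic unit, and the gradients are written out by hand instead of using a deep learning library. All names and hyperparameters are made up for the example.

```python
import math
import random

def sigmoid(s):
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)

def train_toy_gan(steps=2000, lr=0.01, seed=0):
    rng = random.Random(seed)
    a, b = 1.0, 0.0   # generator: fake = a * z + b
    w, c = 0.0, 0.0   # discriminator: P(real) = sigmoid(w * x + c)
    for _ in range(steps):
        real = rng.gauss(4.0, 0.5)   # a sample of the "real" data
        z = rng.gauss(0.0, 1.0)      # random numbers fed to the generator
        fake = a * z + b

        # Discriminator step: push D(real) toward 1 and D(fake) toward 0.
        d_real = sigmoid(w * real + c)
        d_fake = sigmoid(w * fake + c)
        grad_w = (d_real - 1.0) * real + d_fake * fake
        grad_c = (d_real - 1.0) + d_fake
        w -= lr * grad_w
        c -= lr * grad_c

        # Generator step: change a and b so the discriminator says "real".
        d_fake = sigmoid(w * fake + c)
        grad_fake = (d_fake - 1.0) * w   # derivative of -log D(fake) w.r.t. fake
        a -= lr * grad_fake * z
        b -= lr * grad_fake
    return (a, b), (w, c)

(gen_a, gen_b), (disc_w, disc_c) = train_toy_gan()
print("generator parameters:", gen_a, gen_b)
print("discriminator parameters:", disc_w, disc_c)
```

Note that the generator never touches the real samples directly; it only receives feedback through the discriminator's verdict. Even in this tiny setting the two players may keep chasing each other rather than settling down, which hints at why training real GANs is considered delicate.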

How do today's text-to-image models work?

Most of today's AI art generators are based on something called diffusion models. Like Generative Adversarial Networks, these are generative models, yet the principle behind them differs significantly. Unlike GANs, which learn by creating better and better images from scratch, diffusion models destroy the training data by successively adding Gaussian noise, and then learn to recover the data by reversing this noising process.

During training, we show the model many samples from a given distribution, for example many images of churches. After training, we can use the diffusion model to generate new images of churches by simply passing randomly sampled noise through the learned denoising process (have a look at the image below).

In this article we won’t delve into the mathematical background of diffusion models, but if you have the desire to learn more, I encourage you to read this article which presents the principles of diffusion models in a clear and understandable way.

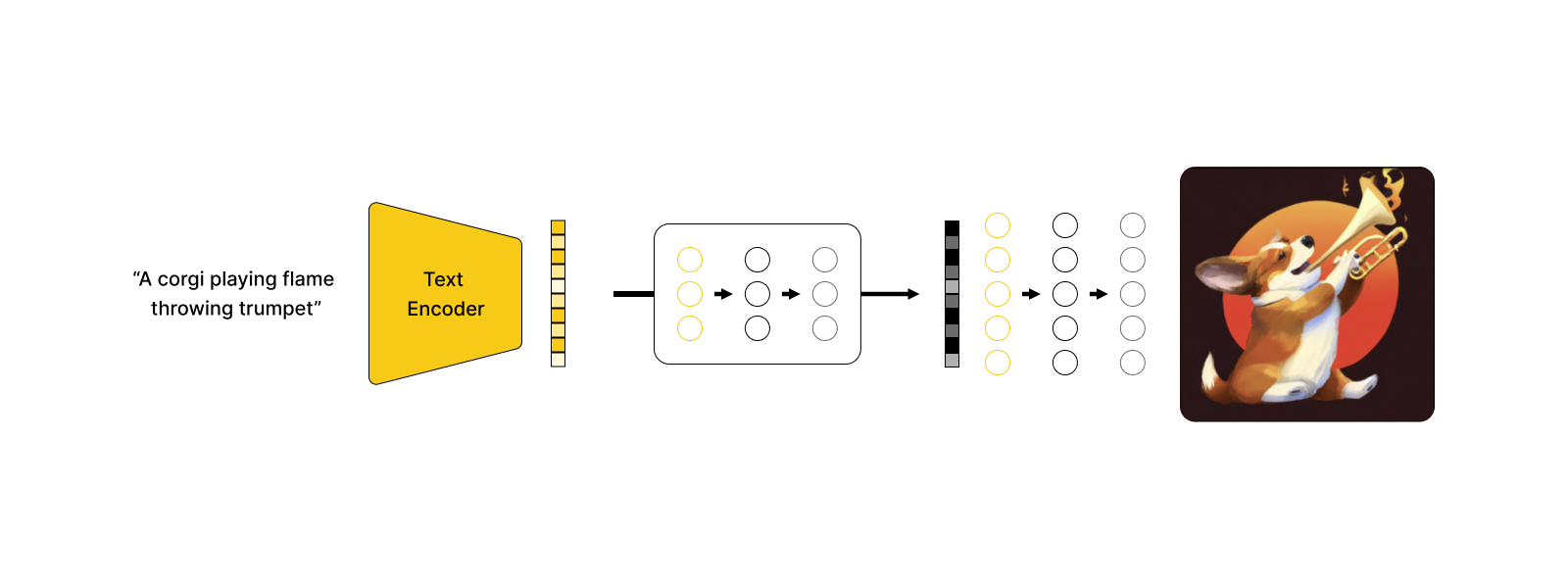

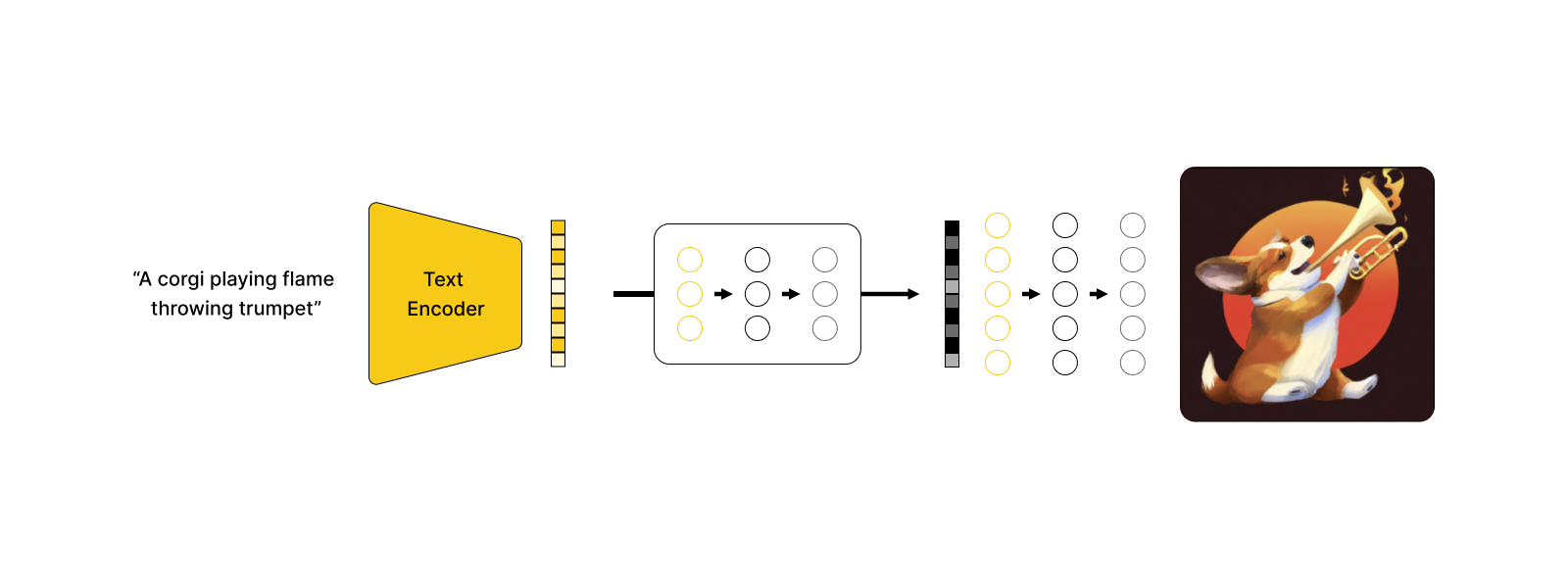
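Without going into the full mathematics, the "destroying" half of a diffusion model is simple enough to sketch. The snippet below assumes a linear noise schedule with 1000 steps and noise levels from 0.0001 to 0.02, a common choice in the literature but not something this article's sources specify; the three-pixel "image" and the function names are made up for illustration. The learned denoising network, which is the hard part, is not shown.

```python
import math
import random

def make_alpha_bars(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative products of (1 - beta_t) for a linear noise schedule."""
    alpha_bars, running = [], 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def add_noise(x0, t, alpha_bars, rng):
    """Jump straight to step t: sqrt(a_bar) * x0 + sqrt(1 - a_bar) * noise."""
    a_bar = alpha_bars[t]
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [
        math.sqrt(a_bar) * x + math.sqrt(1.0 - a_bar) * n
        for x, n in zip(x0, noise)
    ]
    return xt, noise  # a denoising network would be trained to predict `noise`

alpha_bars = make_alpha_bars()
rng = random.Random(0)
image = [0.2, 0.8, 0.5]  # a made-up three-pixel "image"

slightly_noisy, _ = add_noise(image, 0, alpha_bars, rng)
pure_noise, _ = add_noise(image, len(alpha_bars) - 1, alpha_bars, rng)
print(slightly_noisy)  # still very close to the original pixels
print(pure_noise)      # almost nothing of the original is left
```

Generation runs this process in reverse: it starts from pure noise and repeatedly applies the trained denoiser until an image emerges.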

We already know what the process of generating new images looks like in modern AI art generators, but in order for us to use them as we do now, we need to compose three elements:

- First, the prompt text is passed to the text encoder, which is trained to transfer the text to the vector representation space (embedding space).

- Then, it’s pushed to a model called prior where the vector representation of the text is translated into a corresponding vector representation of the image, so that the semantic information of the prompt is preserved.

- Finally, the image decoder stochastically generates an image that is a visual manifestation of the prompt's semantic information.
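The three stages above fit together like a simple function pipeline. The sketch below only shows the data flow; every function body is a made-up stand-in (a hash instead of a trained text encoder, a trivial arithmetic mapping instead of a trained prior, random pixel values instead of a diffusion decoder), and the names and sizes are hypothetical.

```python
import hashlib
import random

EMBED_DIM = 8  # hypothetical, tiny embedding size for illustration

def text_encoder(prompt):
    """Stand-in: map text to a deterministic vector in 'text embedding space'."""
    digest = hashlib.sha256(prompt.encode("utf-8")).digest()
    return [byte / 255.0 for byte in digest[:EMBED_DIM]]

def prior(text_embedding):
    """Stand-in: translate a text embedding into an 'image embedding'."""
    return [1.0 - value for value in text_embedding]

def image_decoder(image_embedding, rng, size=4):
    """Stand-in: stochastically produce a size x size grid of pixel values."""
    base = sum(image_embedding) / len(image_embedding)
    return [
        [min(1.0, max(0.0, base + rng.gauss(0.0, 0.1))) for _ in range(size)]
        for _ in range(size)
    ]

def generate_image(prompt, seed=None):
    """Chain the three stages: prompt -> text vector -> image vector -> pixels."""
    rng = random.Random(seed)
    return image_decoder(prior(text_encoder(prompt)), rng)

picture = generate_image("an opera theatre in space", seed=7)
for row in picture:
    print(row)
```

Because the decoder is stochastic, the same prompt can yield many different images; only the randomness source (here the `seed` argument) decides which one we get.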

3 AI image generators worth your attention

Now that we know how typing in prompt words yields completely new images, it's time to get acquainted with sample AI image generators. I have selected three that I consider particularly noteworthy because of their position in the market, their constantly growing popularity, the diversity in the results generated, and the business approach of the owners.

Midjourney

By: Independent research team led by David Holz (Leap Motion co-founder).

Tech details: The developer does not provide any information about the model's architecture or training data.

Usage: The Midjourney bot can be used from a Discord server https://discord.gg/midjourney, as well as on any other Discord server with the bot configured. The first 25 prompts are free; after that you need to purchase one of the available plans. It is worth noting that while using the Discord bot, our prompts and their results are public and can be seen by other users.

Key features: The Midjourney engine gives images an "analog" style rather than a typical digital look. In "Théâtre D'opéra Spatial", the winning image that inspired me to write this article, and in other images generated by Midjourney, we can see a kind of darkness and drama. Very often the characters in the generated images do not have faces, only strange shapes resembling them. This generator is particularly sensitive to the word "art" in the prompt - you can try it for yourself. In my opinion, the Midjourney generator is able to generate images similar in style and "vibe" to the paintings of one of the most outstanding Polish artists, Zdzisław Beksiński, whose works I adore.

DALL-E 2

By: OpenAI, founded in 2015 by a group that included Elon Musk and Sam Altman.

Tech details: DALL-E 2 uses a diffusion model conditioned on CLIP image embeddings, which are generated from CLIP text embeddings by the prior model. DALL-E 2 has 3.5 billion parameters, 8.5 billion fewer than the earlier DALL-E 1.

Usage: The model is used through the DALL-E server and you need to sign up for the waitlist. Accepted users get free credits for prompts every month and if they need more, they can buy additional ones.

Key features: The DALL-E 2 engine produces very realistic results, often looking like photographs. In addition, it delivers very high resolution results, which further enhances the impression of photos. DALL-E 2 is the only one of the models in question to offer an inpainting option, allowing you to edit a fragment of an existing image, for example, to "expand" existing artwork such as the Mona Lisa or others.

Stable Diffusion

By: Stability AI, CompVis and supporting companies

Tech details: This model is based on latent diffusion, and the code is completely public with all details in a repository. It is written in Python and was trained for 150k GPU-hours, at a total cost of $600,000.

Usage: The model was published on HuggingFace, from where it can be used via the diffusers library. So there is no waiting list, registration or price list. You just need to have a free account on HuggingFace and agree to the model's terms and conditions.

Key features: Stable Diffusion, similarly to DALL-E, is able to generate very realistic results. Unlike the previous generators discussed, it lets us replicate the results of the model, as well as create new images very close to a previous one, because we can manually set the initial seed of the diffusion algorithm. We use the Stable Diffusion model on our own machine as a Python library. That way we don't have a global server serving all the prompts, which can positively affect the time of obtaining results, but also leaves users without any supervision. And as we all know from the previous part of the article, that can lead to dangerous misuse.
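Why a fixed seed makes results repeatable can be shown without downloading any model. In the sketch below, `toy_denoise` is a hypothetical stand-in for the deterministic part of image generation; the only thing that matters is that the starting noise is fully determined by the seed. As far as I know, the diffusers library exposes the same idea by letting you pass a seeded random generator to the pipeline, but that call is not shown or verified here.

```python
import random

def initial_noise(seed, size=16):
    """The random starting point of the diffusion process for a given seed."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(size)]

def toy_denoise(noise):
    """Hypothetical stand-in for the deterministic part of image generation."""
    return [round(0.5 + 0.1 * value, 6) for value in noise]

image_a = toy_denoise(initial_noise(seed=42))
image_b = toy_denoise(initial_noise(seed=42))  # same seed: identical result
image_c = toy_denoise(initial_noise(seed=43))  # new seed: a different result

print(image_a == image_b)
print(image_a == image_c)
```

With a hosted generator that picks the seed for you, the same prompt gives a new image every time; with a local model, noting down the seed is enough to get the same picture back.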

I encourage you to test the three described AI art generators yourself and compare the results obtained from the same prompts. However, if you do not have the time or opportunity, it’s worth taking a look at an article from PetaPixel that compares several side-by-side results. As I mentioned earlier, the images generated by Midjourney are distinguished by their analogue quality, as well as their darker feel. That being said, they are not as realistic as those generated by DALL-E 2 or Stable Diffusion.

The differences in the results of the three models are primarily due to the differences in the architecture of the mathematical models themselves, as well as the training data used, the length of training, the enhancements applied, etc. Let's also note the difference in the business approach of model developers, each taking a different path to distribute their model to users. Approaches to disclosing the architecture also differ. The developers of Midjourney do not provide any technical details about their model, while DALL-E 2 gives us some general facts and the developers of Stable Diffusion have opened their code to all interested parties. There is no doubt we will see a wave of new AI art generators in the near future, and I look forward to seeing their results.

Creativity or plagiarism

Now that we know the technological background of the art of AI, let's try to look at this topic from a slightly different, more philosophical point of view. I encourage you to do a creativity exercise by asking yourself: what do you think creativity is? In the daily work of software houses like MasterBorn, creativity is extremely important, as is having people on the team who can bring that creativity into the most valuable products. Together with Janusz Magoński, our Product Designer, we asked ourselves this intriguing question: what is creativity? Where are the limits of originality, and where does plagiarism begin?

Noted English author William Ralph Inge answers our question in this way: "What is originality? Undetected plagiarism." Honestly, it's hard to disagree with him. After all, each of us has been observing the world around us since childhood, reading books, looking at paintings, photos or movies, listening to music, listening to the sounds of the street, houses, and nature. Whether we want it or not, we absorb enormous amounts of data. Everything we create will therefore be a kind of mixture of our past experiences, observations, and memories. Creativity is kind of a "connecting of the dots" of existing things in a way that no one has done before. As Janusz pointed out, this is particularly well illustrated by the existence of different painting trends or genres of music, where by definition there is a certain repetition and common elements. And yet, we are still able to delight in a new blues piece, even though it is built on the same scale as hundreds of previous other compositions.

The main goal of AI researchers is to make algorithms at least somewhat similar to our human brains. And just as we learn everything by observing the world, AI models learn by observing data. The difference, in my opinion, is that likely only a few human artists would spend many years studying all of Rembrandt's paintings in order to learn his style perfectly, while for artificial intelligence this is not a problem at all and would probably take only a few minutes.

So, it is natural to ask - is using AI in the creative process therefore a cheat? Interestingly, very similar thoughts were held by artists after 1839, when the first references to photography circulated the world. One Dutch periodical published a letter warning of "an invention (...) which may cause some anxiety to our Dutch painters. A method has been found by which sunlight is elevated to the rank of drawing master, and a faithful representation of nature becomes the work of a few minutes." Sounds familiar, doesn't it? Despite initial concerns about replacing painters with photographs, nothing of the sort happened. On the contrary, not only did photography not "kill" traditional art, it enriched it. With the ability to capture a specific moment on film, artists studied specific elements of photographs, falling light, the silhouettes of people in motion, and used the knowledge they gained to better their works. The invention of photography also forced people to depict reality a little more creatively and abstractly. After all, an "ordinary" landscape was now much better rendered in photographs. Thus, new genres were created in art, having in mind an alternative way of depicting reality.

On the other hand, photography itself has become a separate field of art. It could be said that each of us can press the shutter button, yet not all of us are famous, respected photographers. Maybe that's because the art of photography is about more than learning how to use a camera, choosing an aperture, and other parameters. It's about a kind of atmosphere of a photo, its composition, the ability to capture certain emotions, a particular moment, light, or the state of the world that captures the photographer's intention. So can we say that AI image generators will simply be a new tool in the hands of artists, just like the camera in the hands of photographers?

Applying the AI art in real life - an ease or a threat

I asked Janusz if, as a Product Designer with many years of creative work experience, he sees the potential for applying AI generators to the daily work of his profession. It turns out that this is a very broad field for further growth, and three key areas in which we could start using AI art today are:

- Design - support the development of new concepts and give a completely fresh look at the design of standard products, unconstrained by learned rules

- Animation - the optimization of design processes, a significant reduction in the time needed to produce a given animation, for example, by manually styling one frame and using AI to make up the remaining frames

- Architecture - inspiration for the design of buildings and spaces, sometimes based on seemingly abstract concepts, such as mushroom structure in buildings, coral reef textured walls, etc.

We may think that since the AI generator is able to design us a new shoe, with a design never seen before on the most famous fashion runways, we will instantly stop needing designers. However, if we look at the bigger picture, we will see a number of limitations of such a scenario. A shoe not only has to be beautiful, amazing and innovative in appearance, it would be nice if it could be put on a foot. We need to find a material from which such a shoe could be made. In fact, we need to figure out how to transfer the cosmic vision to a shoemaker's table and physically make such an object. We can cite even more aspects stemming from the real world for architectural design. For instance, the building must meet a number of standards, the materials must be durable, our homes must be able to withstand various weather conditions, and so on. After all, we can’t make a house with walls like a coral reef sponge, full of holes, because we will simply freeze in the winter. All this makes people such as designers, planners and architects irreplaceable.

What's next?

As always, the question remains - what's next? Of the many possible paths forward, we can distinguish two extreme scenarios:

- Exploiting the potential of AI as much as possible and the "mass production" of new ideas.

- Rejecting AI-generated ideas, returning to the values of Arts and Crafts, appreciating products produced only by human imagination.

Personally, I think we will be somewhere between these two extreme scenarios in the coming years. I'm sure that big companies involved in fashion, design, gadgets or hardware design will not miss the opportunities (and therefore money) that come from including AI image generators in their creative process. On the other hand, I believe that handmade crafts created by a specific person have their own special value and that there are many people who share my opinion. I very much hope that we will move toward diversity and a symbiosis of purely human creativity and creativity supported by AI, so that everyone will find something in accordance with their expectations and beliefs.

I encourage you to have a discussion on AI art among your friends and consider whether it is a booster of human creativity or the opposite, as it is a fascinating topic full of interesting facts. After all, who knows, maybe the next MasterBorn blog post will be written and illustrated by an artificial intelligence?
